REGIONS

by DARYL ANSELMO

— JUNE 2024

ABSTRACT

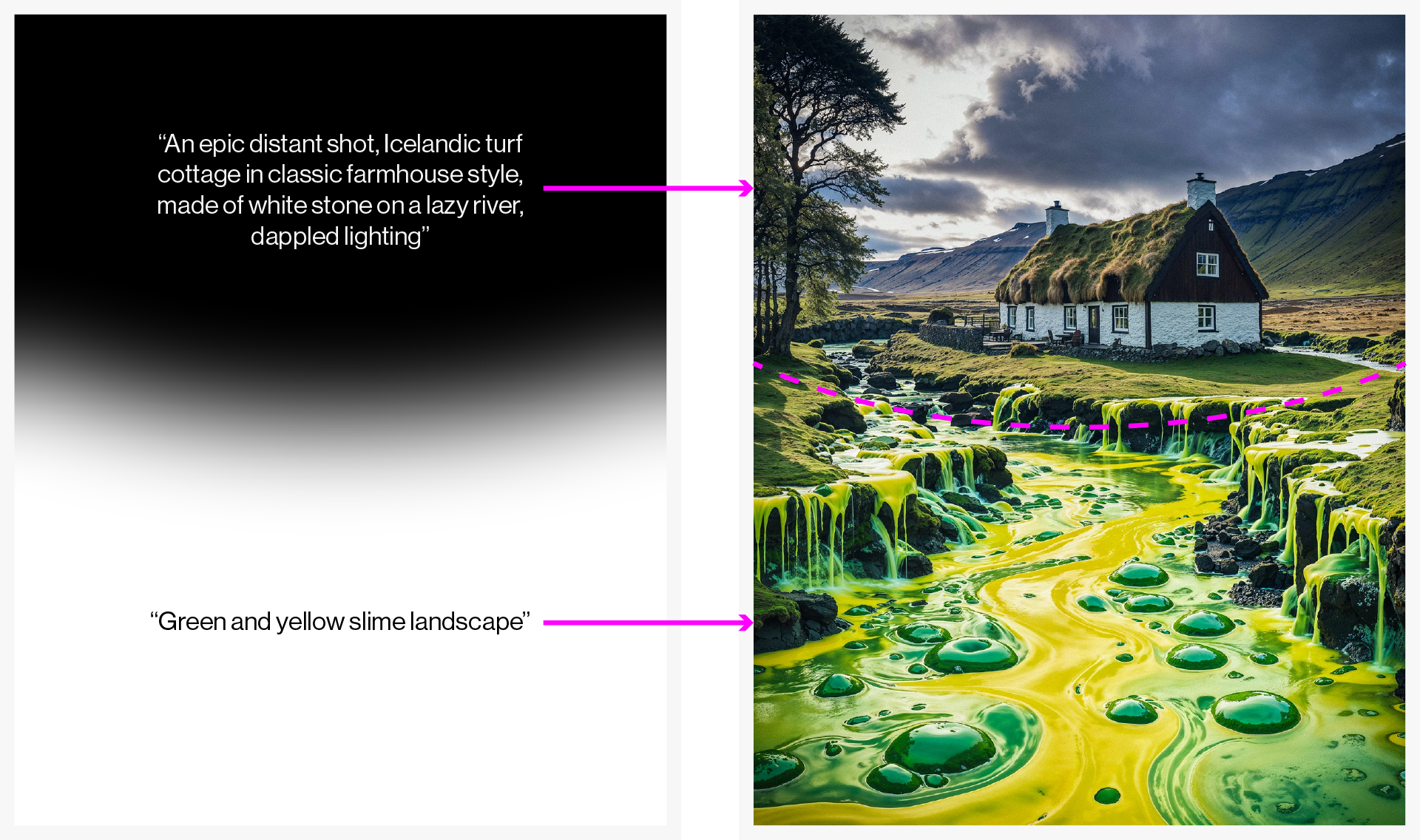

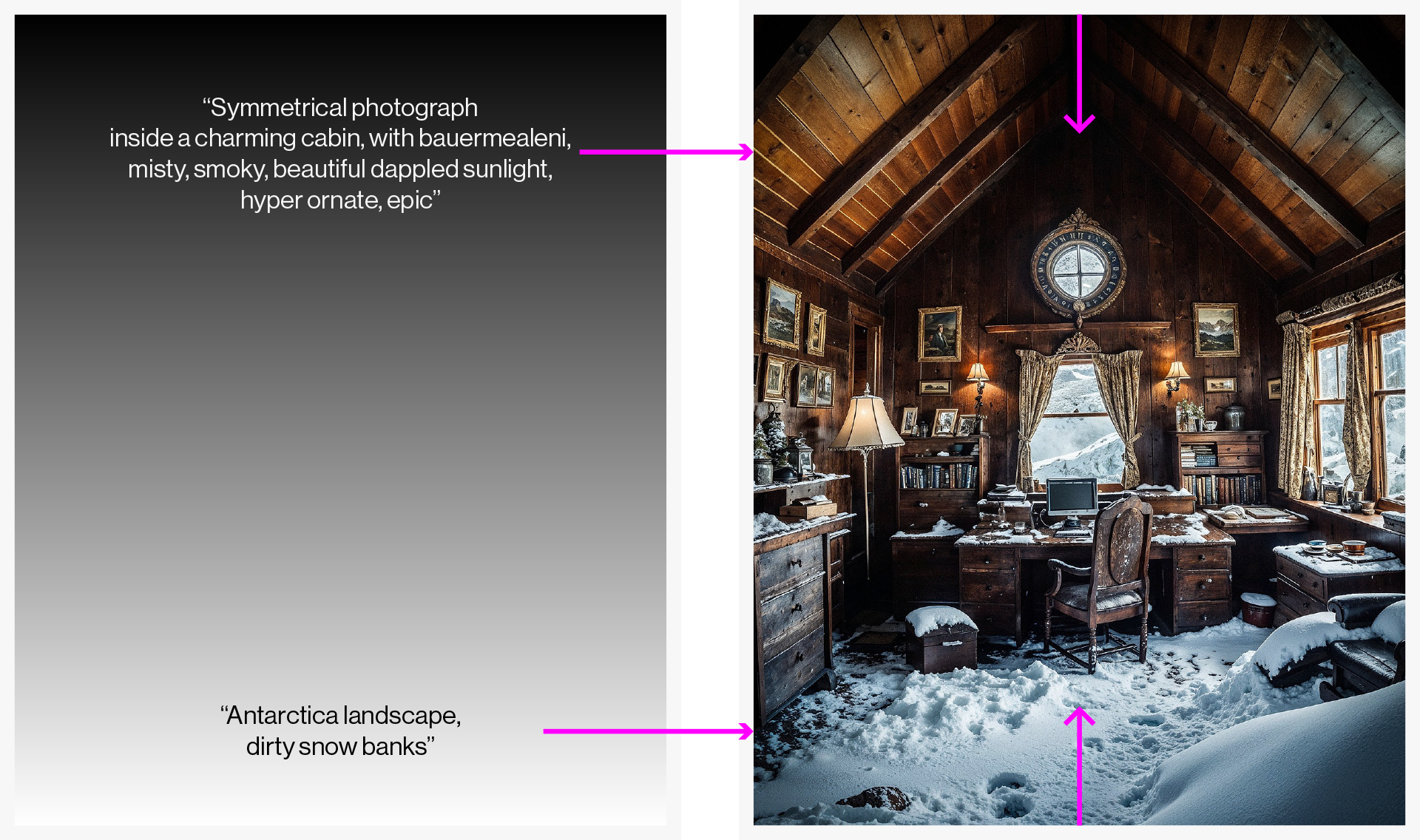

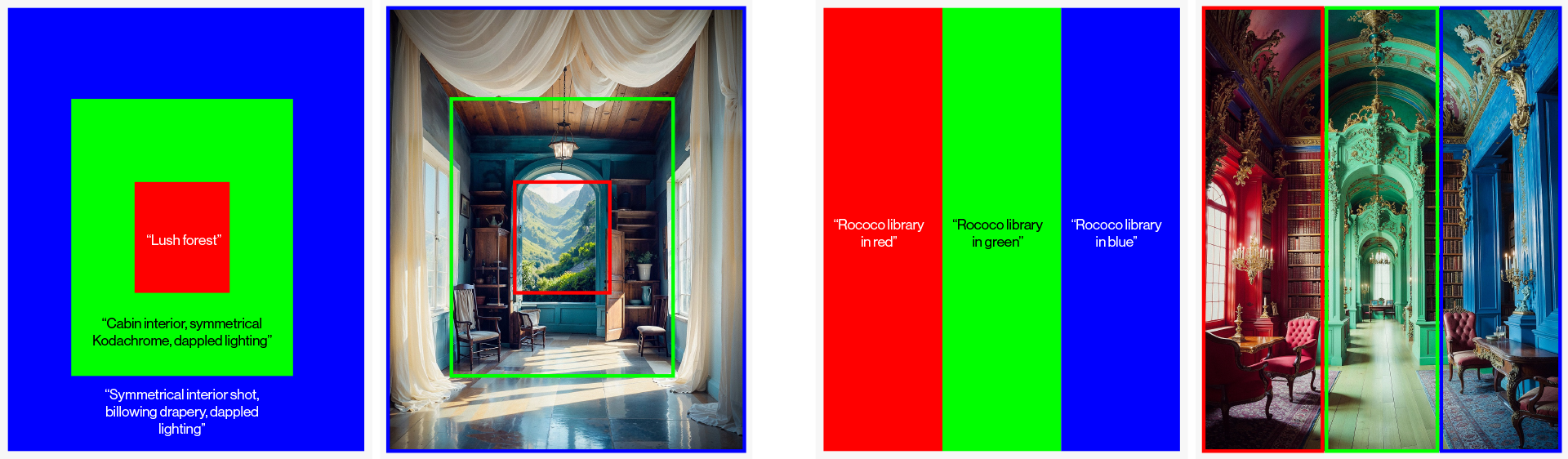

From tape and stencils to friskets and dodging, masking techniques have been used across all forms of traditional image production for centuries, allowing artists to protect certain parts of an image while isolating others for specific changes.

For AI to be useful as a production tool, it must offer precise artistic control. Inpainting and outpainting are now common techniques, but specifying distinct regions up front, each diffused with different visual information, is a powerful approach that is not yet as widely practiced.

In 'Regions', his 10th consecutive 13-week deep dive, Anselmo explores how these methods can be applied to control image diffusion.

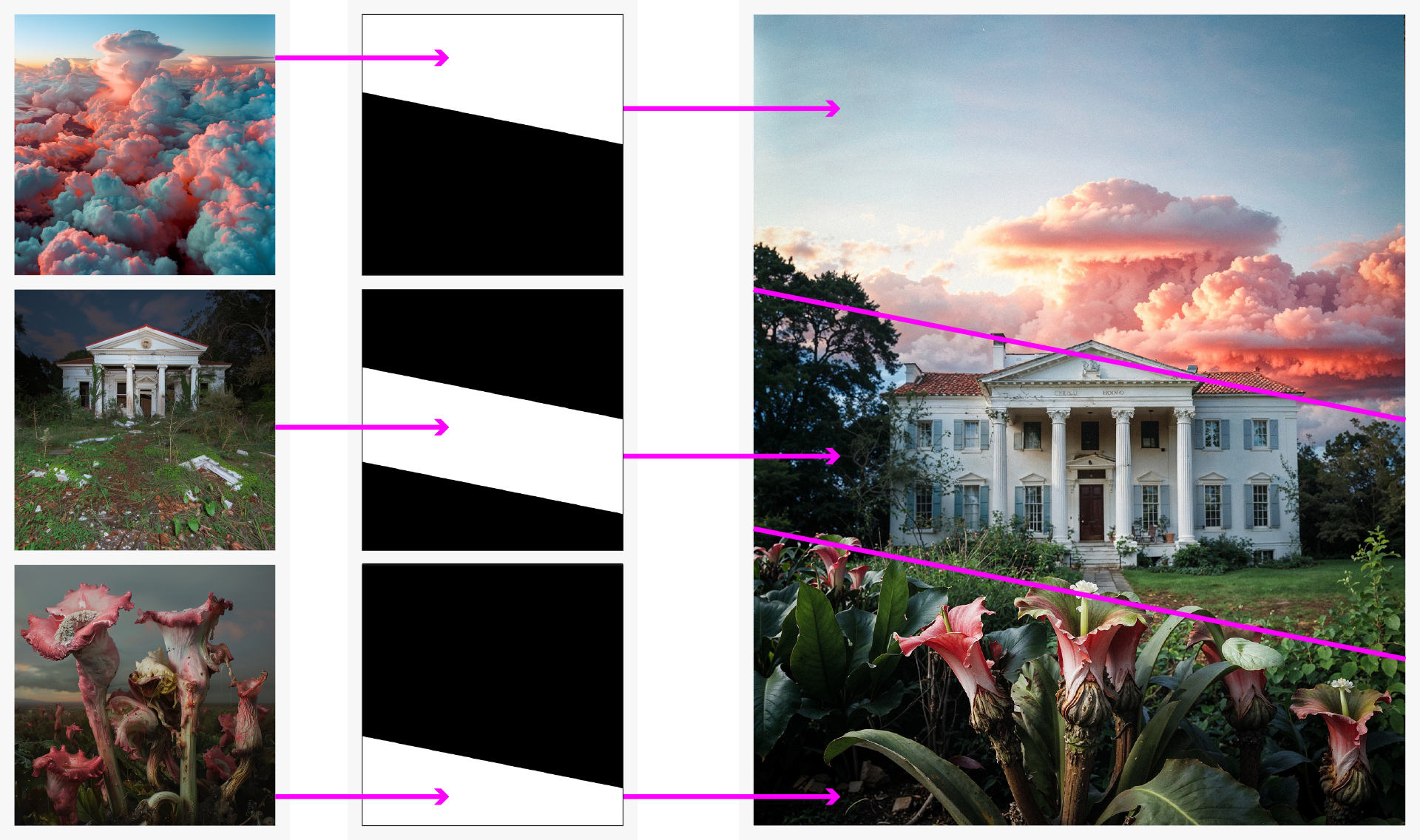

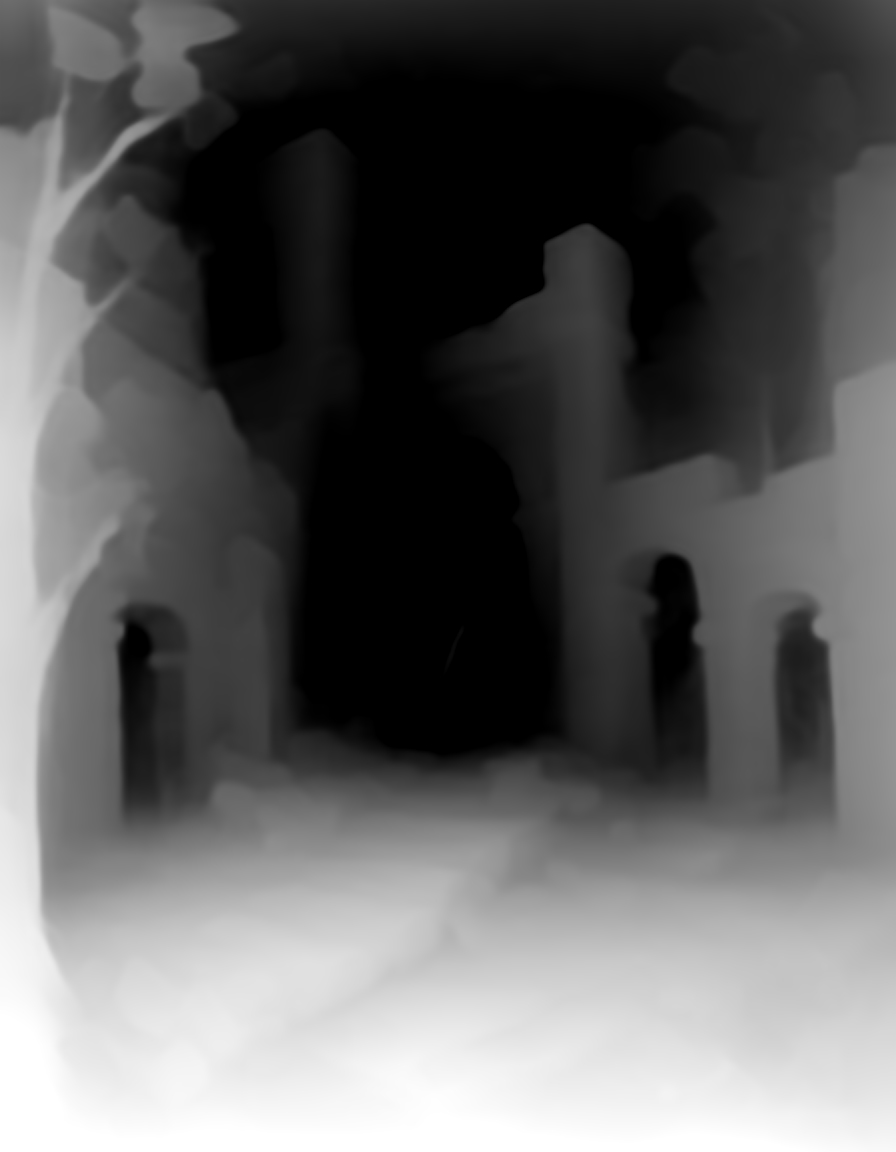

Fig 1.1: unsettling Greek Revival, with pink clouds and corpse flowers - June 23, 2024

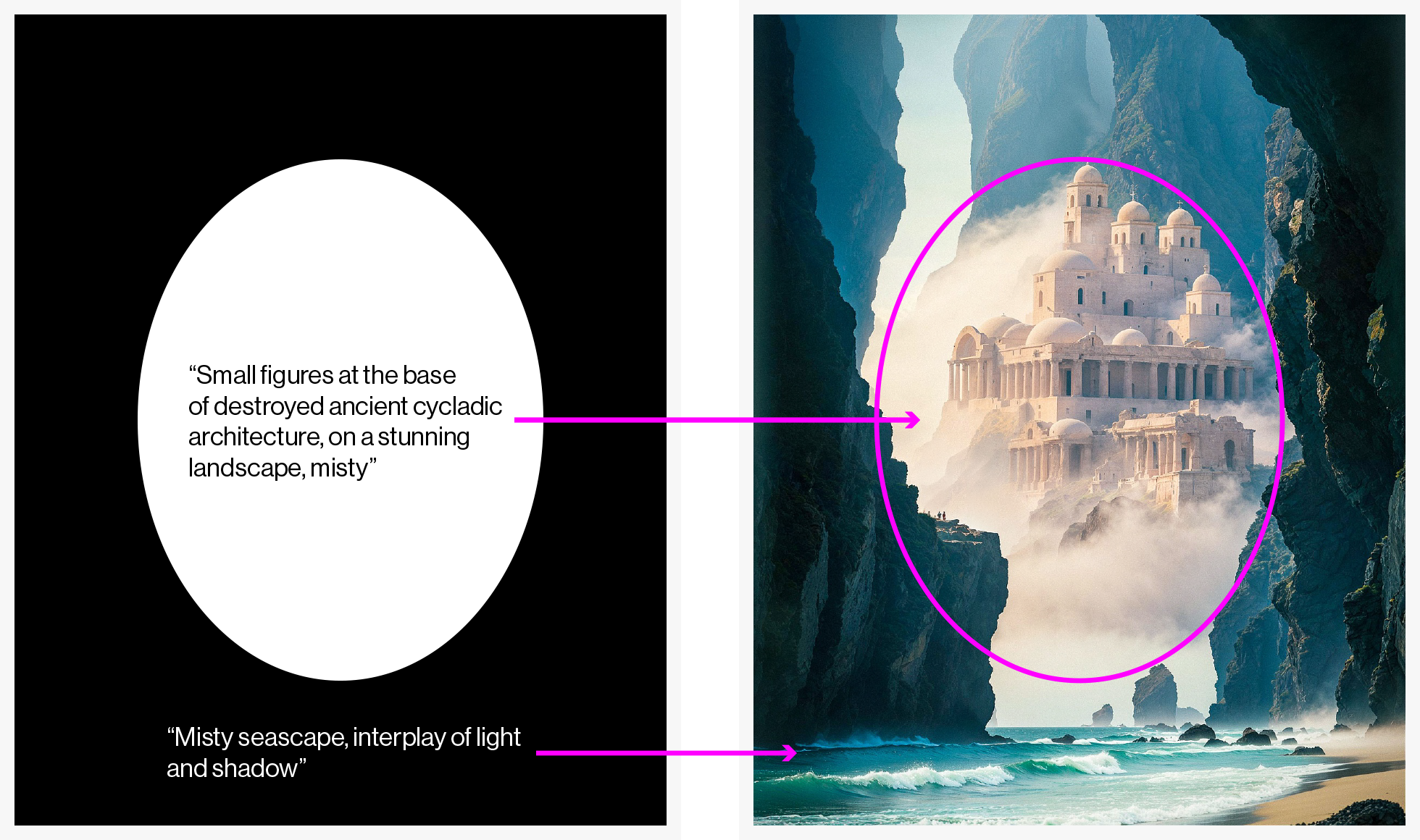

Fig 1.2: misty seascapes are vignetted around Cycladic ruins - May 5, 2024

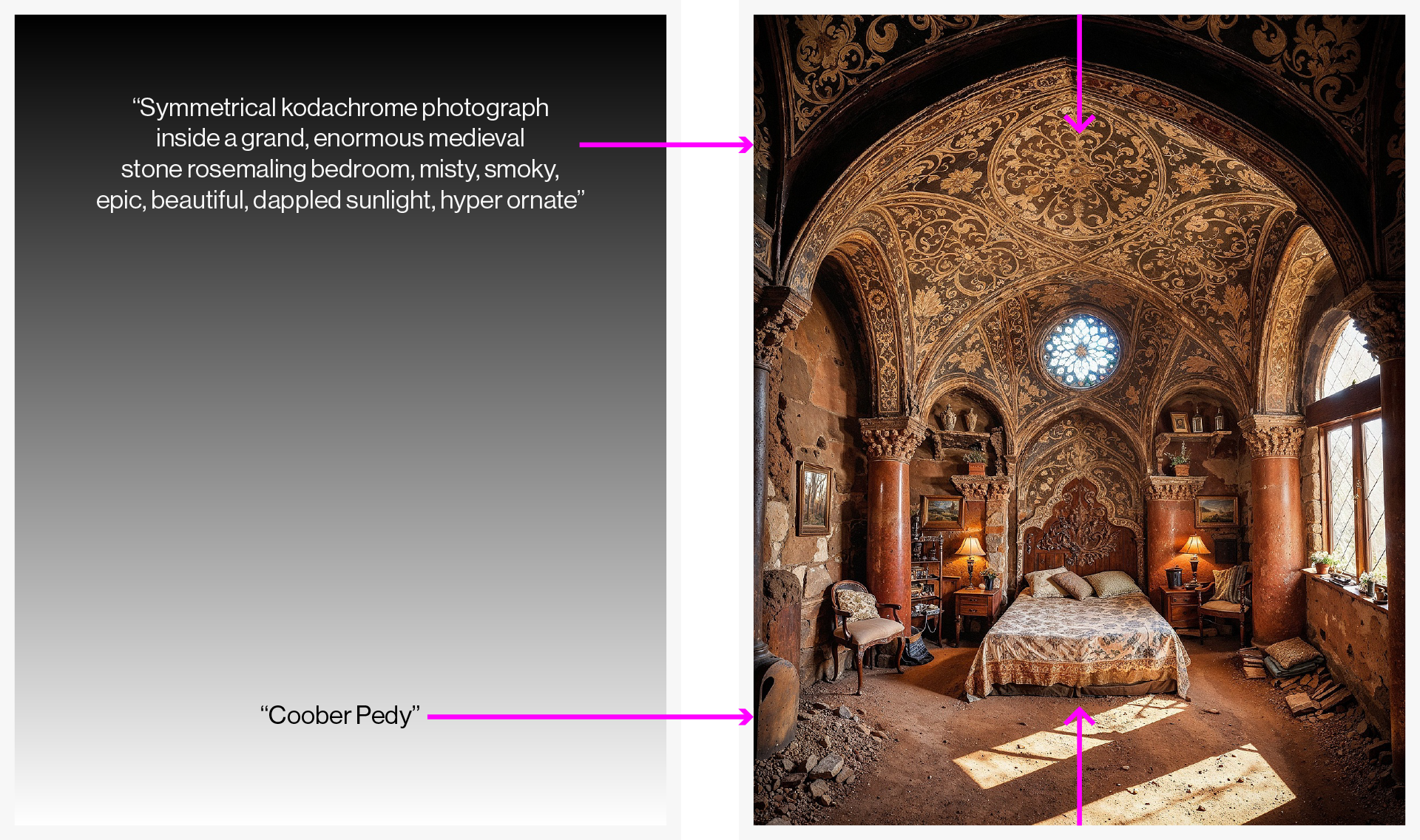

Fig 1.3: a vertical gradient of medieval rosemaling over Coober Pedy - May 21, 2024